Emitter Detector Redux

In the first edition of Think Bayes, I presented what I called the Geiger counter problem, which is based on an example in Jaynes, Probability Theory. But I was not satisfied with my solution or the way I explained it, so I cut it from the second edition.

I am re-reading Jaynes now, following the excellent series of videos by Aubrey Clayton, and this problem came back to haunt me. On my second attempt, I have a solution that is much clearer, and I think I can explain it better.

I’ll outline the solution here, but for all of the details, you can read the bonus chapter, or click here to run the notebook on Colab.

The Emitter-Detector Problem

Here’s the example from Jaynes, page 168:

We have a radioactive source … which is emitting particles of some sort … There is a rate p, in particles per second, at which a radioactive nucleus sends particles through our counter; and each particle passing through produces counts at the rate θ. From measuring the number {c1 , c2 , …} of counts in different seconds, what can we say about the numbers {n1 , n2 , …} actually passing through the counter in each second, and what can we say about the strength of the source?

As a model of the source, Jaynes suggests we imagine “N nuclei, each of which has independently the probability r of sending a particle through our counter in any one second”. If N is large and r is small, the number of particles emitted in a given second is well modeled by a Poisson distribution with parameter s=Nr, where s is the strength of the source.

As a model of the sensor, we’ll assume that “each particle passing through the counter has independently the probability ϕ of making a count”. So if we know the actual number of particles, n, and the efficiency of the sensor, ϕ, the distribution of the count is Binomial(n,ϕ).

With that, we are ready to solve the problem. Following Jaynes, I’ll start with a uniform prior for s, over a range of values wide enough to cover the region where the likelihood of the data is non-negligible. To represent distributions, I’ll use the Pmf class from empiricaldist.

ss = np.linspace(0, 350, 101) prior_s = Pmf(1, ss)

For each value of s, the distribution of n is Poisson, so we can form the joint prior of s and n using the poisson function from SciPy. The following function creates a Pandas DataFrame that represents the joint prior.

def make_joint(prior_s, ns):

ss = prior_s.qs

S, N = np.meshgrid(ss, ns)

ps = poisson(S).pmf(N) * prior_s.ps

joint = pd.DataFrame(ps, index=ns, columns=ss)

joint.index.name = 'n'

joint.columns.name = 's'

return joint

The result is a DataFrame with one row for each value of n and one column for each value of s.

To update the prior, we need to compute the likelihood of the data for each pair of parameters. However, in this problem the likelihood of a given count depends only on n, regardless of s, so we only have to compute it once for each value of n. Then we multiply each column in the prior by this array of likelihoods. The following function encapsulates this computation, normalizes the result, and returns the posterior distribution.

def update(joint, phi, c):

ns = joint.index

likelihood = binom(ns, phi).pmf(c)

posterior = joint.multiply(likelihood, axis=0)

normalize(posterior)

return posterior

First update

Let’s test the update function with the first example, on page 178 of Probability Theory:

During the first second,

c1 = 10counts are registered. What can [we] say about the numbern1of particles?

Here’s the update:

c1 = 10 phi = 0.1 posterior = update(joint, phi, c1)

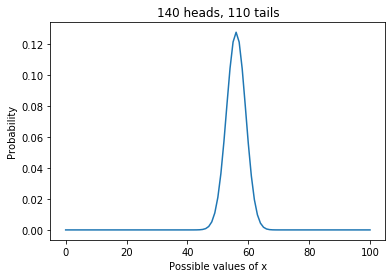

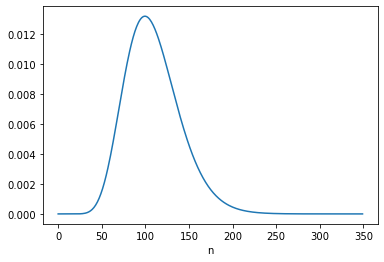

The following figures show the posterior marginal distributions of s and n.

posterior_s = marginal(posterior, 0)

posterior_n = marginal(posterior, 1)

The posterior mean of n is close to 109, which is consistent with Equation 6.116. The MAP is 99, which is one less than the analytic result in Equation 6.113, which is 100. It looks like the posterior probabilities for 99 and 100 are the same, but the floating-point results differ slightly.

Jeffreys prior

Instead of a uniform prior for s, we can use a Jeffreys prior, in which the prior probability for each value of s is proportional to 1/s. This has the advantage of “invariance under certain changes of parameters”, which is “the only correct way to express complete ignorance of a scale parameter.” However, Jaynes suggests that it is not clear “whether s can properly be regarded as a scale parameter in this problem.” Nevertheless, he suggests we try it and see what happens. Here’s the Jeffreys prior for s.

prior_jeff = Pmf(1/ss[1:], ss[1:])

We can use it to compute the joint prior of s and n, and update it with c1.

joint_jeff = make_joint(prior_jeff, ns) posterior_jeff = update(joint_jeff, phi, c1)

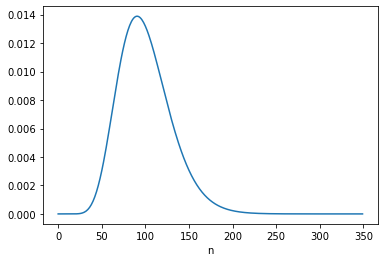

Here’s the marginal posterior distribution of n:

posterior_n = marginal(posterior_jeff, 1)

The posterior mean is close to 100 and the MAP is 91; both are consistent with the results in Equation 6.122.

Robot A

Now we get to what I think is the most interesting part of this example, which is to take into account a second observation under two models of the scenario:

Two robots, [A and B], have different prior information about the source of the particles. The source is hidden in another room which A and B are not allowed to enter. A has no knowledge at all about the source of particles; for all [it] knows, … the other room might be full of little [people] who run back and forth, holding first one radioactive source, then another, up to the exit window. B has one additional qualitative fact: [it] knows that the source is a radioactive sample of long lifetime, in a fixed position.

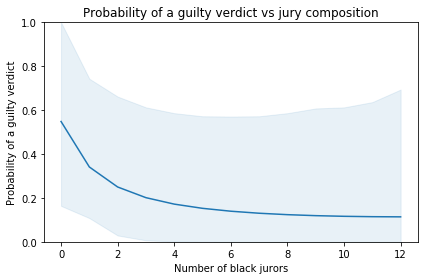

In other words, B has reason to believe that the source strength s is constant from one interval to the next, while A admits the possibility that s is different for each interval. The following figure, from Jaynes, represents these models graphically.

For A, the “different intervals are logically independent”, so the update with c2 = 16 starts with the same prior.

c2 = 16 posterior2 = update(joint, phi, c2)

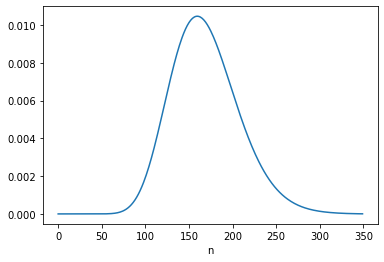

Here’s the posterior marginal distribution of n2.

The posterior mean is close to 169, which is consistent with the result in Equation 6.124. The MAP is 160, which is consistent with Equation 6.123.

Robot B

For B, the “logical situation” is different. If we consider s to be constant, we can – and should! – take the information from the first update into account when we perform the second update. We can do that by using the posterior distribution of s from the first update to form the joint prior for the second update, like this:

joint = make_joint(posterior_s, ns) posterior = update(joint, phi, c2) posterior_n = marginal(posterior, 1)

The posterior mean of n is close to 137.5, which is consistent with Equation 6.134. The MAP is 132, which is one less than the analytic result, 133. But again, there are two values with the same probability except for floating-point errors.

Under B’s model, the data from the first interval updates our belief about s, which influences what we believe about n2.

Going the other way

That might not seem surprising, but there is an additional point Jaynes makes with this example, which is that it also works the other way around: Having seen c2, we have more information about s, which means we can – and should! – go back and reconsider what we concluded about n1.

We can do that by imagining we did the experiments in the opposite order, so

- We’ll start again with a joint prior based on a uniform distribution for s

- Update it based on

c2, - Use the posterior distribution of s to form a new joint prior,

- Update it based on

c1, and - Extract the marginal posterior for

n1.

joint = make_joint(prior_s, ns) posterior = update(joint, phi, c2) posterior_s = marginal(posterior, 0) joint = make_joint(posterior_s, ns) posterior = update(joint, phi, c1) posterior_n2 = marginal(posterior, 1)

The posterior mean is close to 131.5, which is consistent with Equation 6.133. And the MAP is 126, which is one less than the result in Equation 6.132, again due to floating-point error.

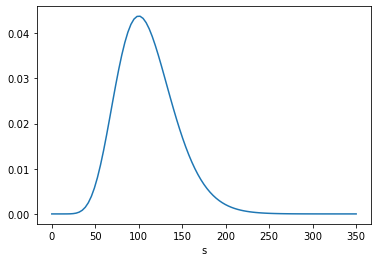

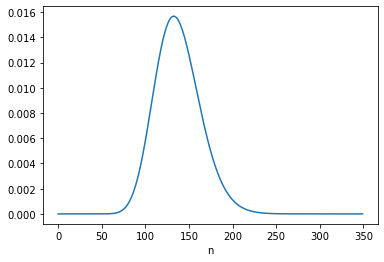

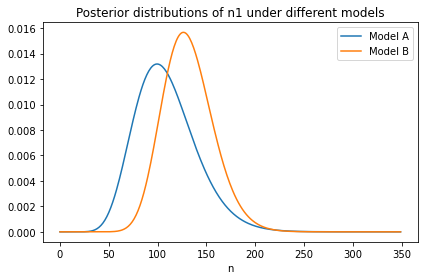

Here’s what the new distribution of n1 looks like compared to the original, which was based on c1 only.

With the additional information from c2:

- We give higher probability to large values of s, so we also give higher probability to large values of

n1, and - The width of the distribution is narrower, which shows that with more information about s, we have more information about

n1.

Discussion

This is one of several examples Jaynes uses to distinguish between “logical and causal dependence.” In this example, causal dependence only goes in the forward direction: “s is the physical cause which partially determines n; and then n in turn is the physical cause which partially determines c”.

Therefore, c1 and c2 are causally independent: if the number of particles counted in one interval is unusually high (or low), that does not cause the number of particles during any other interval to be higher or lower.

But if s is unknown, they are not logically independent. For example, if c1 is lower than expected, that implies that lower values of s are more likely, which implies that lower values of n2 are more likely, which implies that lower values of c2 are more likely.

And, as we’ve seen, it works the other way, too. For example, if c2 is higher than expected, that implies that higher values of s, n1, and c1 are more likely.

If you find the second result more surprising – that is, if you think it’s weird that c2 changes what we believe about n1 – that implies that you are not (yet) distinguishing between logical and causal dependence.