The Lost Chapter

I’m happy to report that Probably Overthinking It is available now in paperback. If you would like a copy, you can order from Bookshop.org and Amazon (affiliate links).

To celebrate, I’m publishing The Lost Chapter — that is, the chapter I cut from the published book. It’s about The Girl Named Florida problem, which might be the most counterintuitive problem in probability — even more than the Monty Hall problem.

When I started writing the book, I thought it would include more puzzles and paradoxes like this, but as the project evolved, it shifted toward real-world problems where data help us answer questions and make better decisions. As much as The Girl Named Florida is challenging and puzzling, it doesn’t have much application in the real world.

But it got a new life on the internet recently, so I think this is a good time to publish! The following is an excerpt; you can read the complete chapter here.

The Girl Named Florida

The Monty Hall Problem is famously contentious. People have strong feelings about the answer, and it has probably started more fights than any other problem in probability. But there’s another problem that I think is even more counterintuitive – and it has started a good number of fights as well. It’s called The Girl Named Florida.

I’ve written about this problem before, and I’ve demonstrated the correct answer, but I don’t think I really explained why the answer is what it is. That’s what I’ll try to do here.

As far as I have found, the source of the problem is Leonard Mlodinow’s book, The Drunkard’s Walk, which poses the question like this:

In a family with two children, what are the chances, if one of the children is a girl named Florida, that both children are girls?

If you have not encountered this problem before, your first thought is probably that the girl’s name is irrelevant – but it’s not. In fact, the answer depends on how common the name is.

If you feel like that can’t possibly be right, you are not alone. Solving this puzzle requires conditional probability, which is one of the most counterintuitive areas of probability. So I suggest we approach it slowly – like we’re defusing a bomb.

We’ll start with two problems involving coins and dice, where the probabilities are relatively simple. These examples demonstrate three principles that will help when things get strange:

- It is not always clear when the condition in a conditional probability is relevant, and our intuition can be unreliable.

- A reliable way to compute conditional probabilities is to enumerate equally likely possibilities and count.

- If someone does something rare, it is likely that they made more than one attempt.

Then, finally, we’ll solve The Girl Named Florida.

Tossing Coins

Let’s warm up with two problems related to coins and dice.

We’ll assume that coins are fair, so the probability of getting heads or tails is 1/2. And the outcome of one coin toss does not affect another, so even if the coin comes up heads ten times, the probability of heads on the next toss is 1/2.

Now, suppose I toss a coin twice where I can see the outcome and you can’t. I tell you that I got heads at least once, and ask you the probability that I got heads both times.

You might think, if the outcome of one coin does not affect the other, it doesn’t matter if one of the coins came up heads – the probability for the other coin is still 1/2.

But that’s not right; the correct answer is 1/3. To see why, consider this:

- After I toss the coins, there are four equally likely outcomes: two heads, two tails, heads first and then tails, or tails first and then heads.

- When I tell you that I got heads at least once, I rule out one of the possibilities, two tails.

- The remaining three possibilities are still equally likely, so the probability of each is 1/3.

- In one of the remaining possibilities, the other coin is also heads.

So the conditional probability is 1/3.

If that argument doesn’t entirely convince you, there’s another way to solve problems like this, called enumeration.

Enumeration

A conditional probability has two parts: a statement and a condition. Both are claims about the world that might be true or not, but they play different roles. A conditional probability is the probability that the statement is true, given that the condition is true. In the coin toss example, the statement is “I got heads both times” and the condition is “I tossed a coin twice and got heads at least once”.

We’ve seen that it can be tricky to compute conditional probabilities, so let me suggest what I think is the most reliable way to get the right answer and be confident that it’s correct. Here are the steps:

- Make a list of equally likely outcomes,

- Select the subset where the condition is true,

- Within the subset where the condition is true, compute the fraction where the statement is also true.

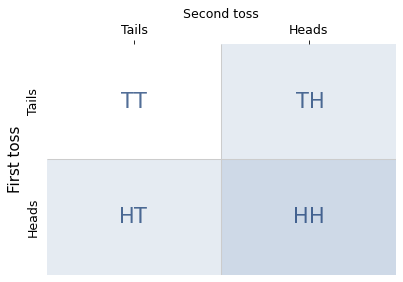

This method is called enumerating the sample space, where the “sample space” is the list of outcomes. In the coin toss example, there are four possible outcomes, as shown in the following diagram.

The shaded cells (both light and dark) show the three outcomes where the condition is true; the darker cell shows the one outcome where the statement is true. So the conditional probability is 1/3.

This example demonstrates one of the principles we’ll need to understand the puzzles: you have to count the combinations. If we know that the number of heads is either one or two, it is tempting to think these possibilities are equally likely. But there is only one way to get two heads, and there are two ways to get one heads. So the one-heads possibility is more likely.
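The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not code from the book: the helper name `conditional_probability` and the use of "H" and "T" to label the tosses are my own choices.

```python
from fractions import Fraction
from itertools import product


def conditional_probability(outcomes, condition, statement):
    """Fraction of the outcomes satisfying `condition` where `statement` also holds.

    Assumes every element of `outcomes` is equally likely.
    """
    subset = [o for o in outcomes if condition(o)]
    hits = [o for o in subset if statement(o)]
    return Fraction(len(hits), len(subset))


# Step 1: list the four equally likely outcomes of two tosses.
tosses = list(product("HT", repeat=2))

# Steps 2 and 3: keep the outcomes with at least one heads,
# then compute the fraction of those where both tosses are heads.
p_two_heads = conditional_probability(
    tosses,
    condition=lambda t: "H" in t,
    statement=lambda t: t == ("H", "H"),
)
print(p_two_heads)  # 1/3
```

Using `Fraction` keeps the result exact, so it can be compared directly with the 1/3 we got by counting cells in the diagram.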

In the next section, we’ll use this method to solve a problem involving dice. But I’ll start with a story that sets the scene.

Can We Get Serious Now?

The 2016 film Sully is based on the true story of Captain Chesley Sullenberger, who famously and improbably landed a large passenger jet in the Hudson River near New York City, saving the lives of all 155 people on board.

In the aftermath of this emergency landing, investigators questioned his decision to ditch the airplane rather than attempt to land at one of two airports nearby. To demonstrate that these alternatives were feasible, they showed simulations of pilots landing successfully at both airports.

In the movie version of the hearing, Tom Hanks, who played Captain Sullenberger, memorably asks, “Can we get serious now?” Having seen the simulations, he says, “I’d like to know how many times the pilot practiced that maneuver before he actually pulled it off. Please ask how many practice runs they had.”

One of the investigators replies, “Seventeen. The pilot […] had seventeen practice attempts before the simulation we just witnessed.” And the audience gasps.

Of course this scene is fictionalized, but the logic of this exchange is consistent with the actual investigation. It is also consistent with the laws of probability.

If someone accomplishes an unlikely feat, you are right to suspect it was not their first try. And the more unlikely the feat, the more attempts you might guess they made.

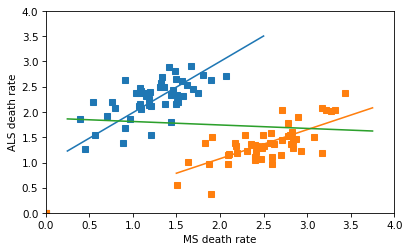

I will demonstrate this point with coins and dice. Suppose I toss a coin and, based on the outcome, roll a die either once or twice. I don’t let you see the coin or the die, and you don’t know how many times I rolled, but I report that I rolled at least one six. Which do you think is more likely, that I rolled once or twice?

You might suspect that I rolled twice – and this time your intuition is correct. If I get a six, it is more likely that I rolled twice.

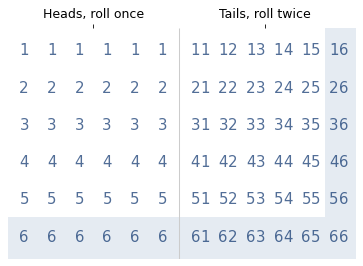

To see how much more likely, let’s enumerate the possibilities. The following diagram shows 72 equally likely outcomes.

The left side shows 36 cases where I roll the die once, using a single digit to represent the outcomes. The right side shows 36 cases where I roll the die twice: the first digit represents the first roll; the second digit represents the second roll.

The shaded area indicates the outcomes where at least one die is a six. There are 17 in total, 6 when I roll the die once and 11 when I roll it twice. So if I tell you I rolled at least one six, the probability is 11/17 that I rolled the die twice, which is about 65%.
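Here is one way to check that count by enumeration. It is a sketch under the same assumptions as the diagram: a fair coin decides between one roll and two, and each single-roll result is listed six times so that all 72 entries carry the same weight. The variable names are mine.

```python
from fractions import Fraction

faces = range(1, 7)

# 36 cases with one roll: each face repeated 6 times, so every entry
# has the same probability (1/72) as an entry on the two-roll side.
one_roll = [(1, (a,)) for a in faces for _ in range(6)]

# 36 cases with two rolls: every ordered pair of faces.
two_rolls = [(2, (a, b)) for a in faces for b in faces]

outcomes = one_roll + two_rolls

# Condition: at least one die shows a six.
with_six = [(n, dice) for n, dice in outcomes if 6 in dice]

# Statement: I rolled twice.
rolled_twice = [(n, dice) for n, dice in with_six if n == 2]

p_twice = Fraction(len(rolled_twice), len(with_six))
print(len(outcomes), len(with_six), p_twice)  # 72 17 11/17
```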

If you succeed at something difficult, it is likely you had more than one chance.

The Two Child Problems

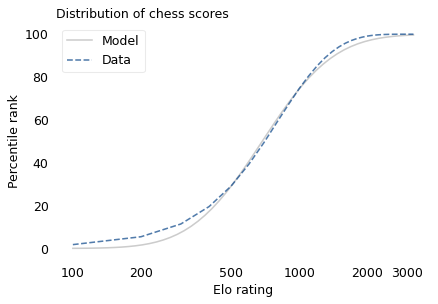

Next we’ll solve two puzzles made famous by Martin Gardner in his Scientific American column in 1959. He posed the first like this:

Mr. Jones has two children. The older child is a girl. What is the probability that both children are girls?

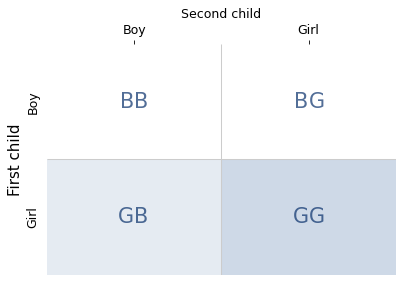

The real world is complicated, so let’s assume that these problems are set in a world where all children are boys or girls with equal probability. With that simplification, there are four equally likely combinations of two children, shown in the following diagram.

The shaded areas show the families where the condition is true – that is, the first child is a girl. The darker area shows the only family where the statement is true – that is, both children are girls.

There are two possibilities where the condition is true and one of them where the statement is true, so the conditional probability is 1/2. This result confirms what you might have suspected: the sex of the older child is irrelevant. The probability that the second child is a girl is 1/2, regardless.

Now here’s the second problem, which I have revised to make it easier to compare with the first:

Mr. Smith has two children. At least one of them is a [girl]. What is the probability that both children are [girls]?

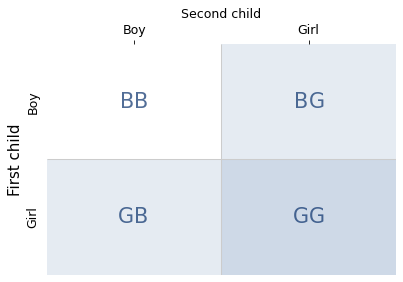

Again, there are four equally likely combinations of two children, shown in the following diagram.

Now there are three possibilities where the condition is true – that is, at least one child is a girl. In one of them, the statement is true – that is, both children are girls. So the conditional probability is 1/3.

This problem is identical to the coin example, and it demonstrates the same principle: you have to count the combinations. There is only one way to have two girls, but there are two ways to have a boy and a girl.
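Both of Gardner's puzzles can be checked with the same enumeration. In this sketch, which assumes the simplified world described above, each family is an (older, younger) pair, and the function name `prob_two_girls` is just a label I chose for illustration.

```python
from fractions import Fraction
from itertools import product

# Four equally likely families, written as (older child, younger child).
families = list(product("BG", repeat=2))


def prob_two_girls(condition):
    """Probability of two girls among the families where `condition` is true."""
    subset = [f for f in families if condition(f)]
    both = [f for f in subset if f == ("G", "G")]
    return Fraction(len(both), len(subset))


# Mr. Jones: the older child is a girl.
p_jones = prob_two_girls(lambda f: f[0] == "G")

# Mr. Smith: at least one child is a girl.
p_smith = prob_two_girls(lambda f: "G" in f)

print(p_jones, p_smith)  # 1/2 1/3
```

The only difference between the two calls is the condition, which is the point: the statement is the same, but the subset we count within changes.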

More Variations

Now let’s consider a series of related questions where:

- One of the children is a girl born on Saturday,

- One of the children is a left-handed girl, and finally

- One of the children is a girl named Florida.

To avoid real-world complications, let’s assume:

- Children are equally likely to be born on any day of the week.

- One child in 10 is left-handed.

- One child out of 1000 is named Florida.

- Children are independent of one another in the sense that the attributes of one (birth day, handedness, and name) don’t affect the attributes of the others.

Let’s also assume that “one of the children” means at least one, so a family could have two girls born on Saturday, or even two girls named Florida.

Saturday’s Child

In a family with two children, what are the chances, if one of the children is a girl born on Saturday, that both children are girls?

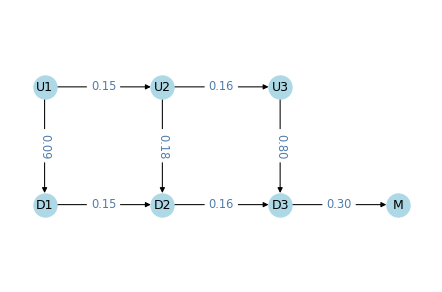

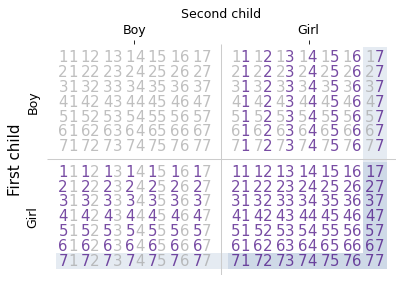

To answer this question, we’ll divide each of the four boy-girl combinations into 49 day-of-the-week combinations.

I’ll represent days with the digits 1 through 7, with 1 for Sunday and 7 for Saturday. And I’ll represent families with two-digit numbers; for example, the number 17 represents a family where the first child was born on Sunday and the second child was born on Saturday.

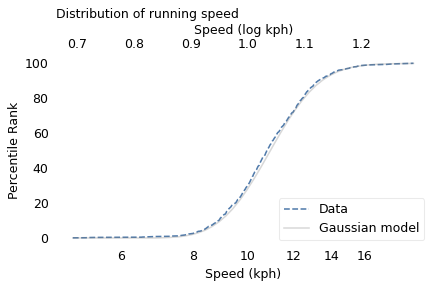

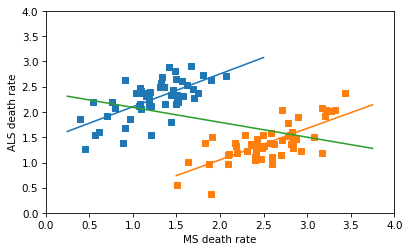

The following diagram shows the four possible orders for boys and girls, and within them, the 49 possible orders for days of the week.

The shaded area shows the possibilities where the condition is true – that is, at least one of the children is a girl born on a Saturday. And the darker area shows the possibilities where the statement is true – that is, both children are girls.

There are 27 cases where the condition is true. In 13 of them, the statement is true, so the conditional probability is 13/27, which is about 48%.

So the day of the week is not irrelevant. If at least one child is a girl, the probability of two girls is about 33%. If at least one child is a girl born on Saturday, the probability of two girls is about 48%.
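If you would rather not count cells by hand, the same enumeration can be sketched in Python. It uses the assumptions stated earlier (equal probability for each sex and each day, independent children) and the same day numbering, with 7 for Saturday. The helper `is_saturday_girl` is a name I made up for this example.

```python
from fractions import Fraction
from itertools import product

days = range(1, 8)  # 1 = Sunday, ..., 7 = Saturday

# 14 equally likely kinds of child: (sex, day of birth).
children = list(product("BG", days))

# 196 equally likely families: (first child, second child).
families = list(product(children, repeat=2))


def is_saturday_girl(child):
    return child == ("G", 7)


# Condition: at least one child is a girl born on Saturday.
condition = [f for f in families if any(is_saturday_girl(c) for c in f)]

# Statement: both children are girls.
statement = [f for f in condition if all(sex == "G" for sex, _ in f)]

print(len(families), len(condition), len(statement))  # 196 27 13
print(Fraction(len(statement), len(condition)))  # 13/27
```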

Now let’s see what happens if the girl is left-handed.

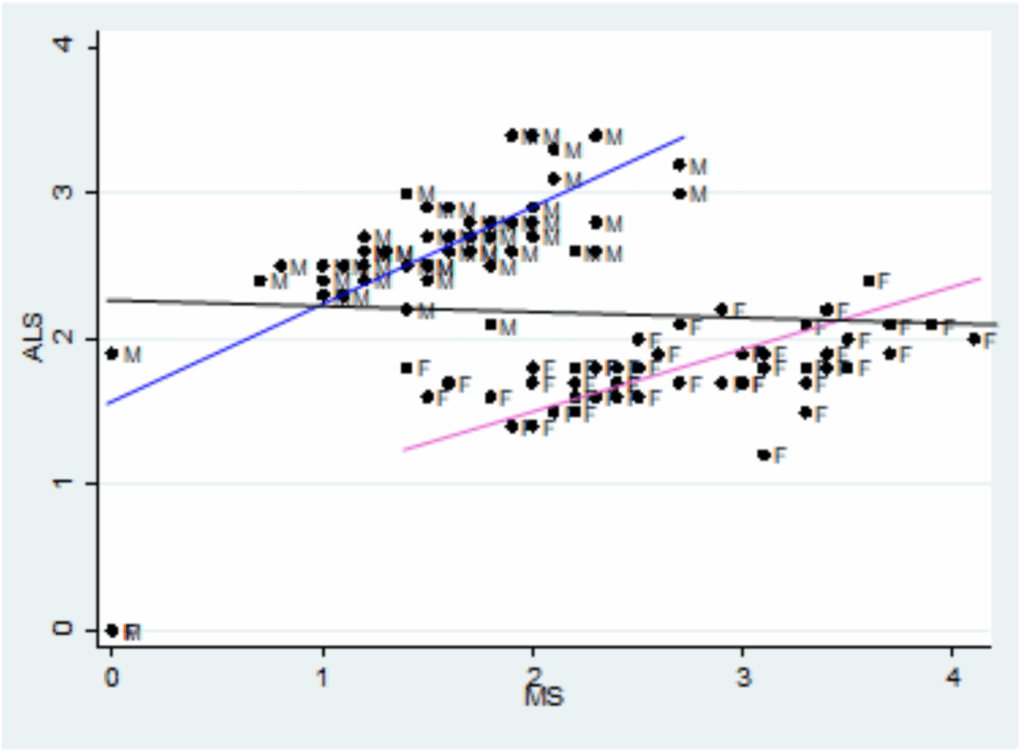

Left-Handed Girl

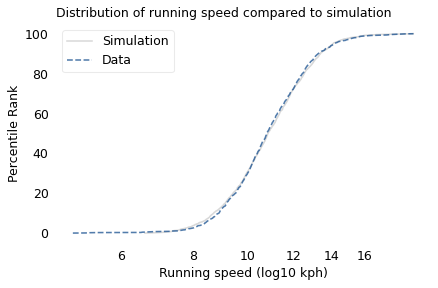

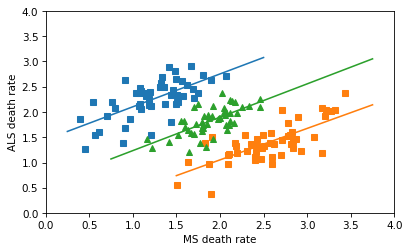

In a family with two children, what are the chances, if one of the children is a left-handed girl, that both children are girls?

Let’s assume that 1 child in 10 is left-handed, and if one sibling is left-handed, it doesn’t change the probability that the other is.

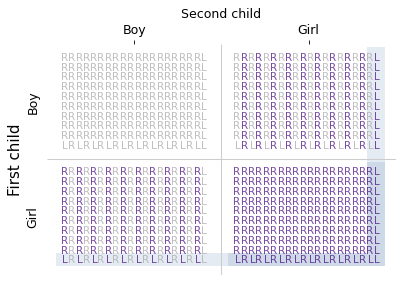

The following diagram shows the possible combinations, using “R” to represent a right-handed child and “L” to represent a left-handed child. Again, the shaded areas show where the condition is true; the darker area shows where the statement is true.

There are 39 combinations where at least one child is a left-handed girl. Of them, there are 19 combinations where both children are girls. So the conditional probability is 19/39, which is about 49%.

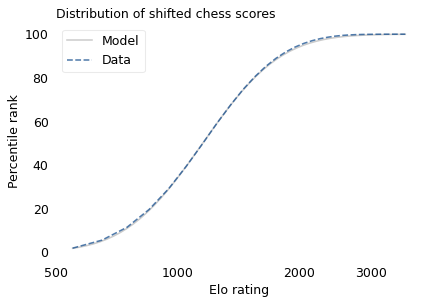

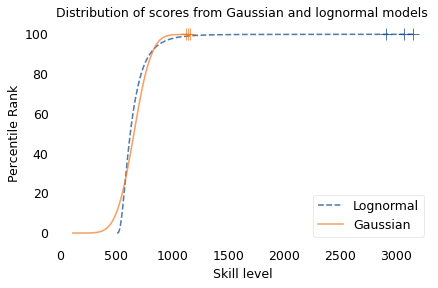

Now we’re starting to see a pattern. If the probability of a particular attribute, like birthday or handedness, is 1 in n, the number of cases where the condition is true is 4n-1 and the number of cases where the statement is true is 2n-1. So the conditional probability is (2n-1) / (4n-1).

Looking at the diagram, we can see where the terms in this expression come from. The multiple of four represents the segments of the L-shaped region where the condition is true; the multiple of two represents the segments where the statement is true. And we subtract one from the numerator and denominator so we don’t count the case in the lower-right corner twice.

In the days-of-the-week example, n is 7 and the conditional probability is 13/27, about 48%. In the handedness example, n is 10 and the conditional probability is 19/39, about 49%. And for the girl named Florida, who is 1 in 1000, the conditional probability is 1999/3999, about 49.99%. As n increases, the conditional probability approaches 1/2.

Going in the other direction, if we choose an attribute that’s more common, like 1 in 2, the conditional probability is 3/7, around 43%. And if we choose an attribute that everyone has, n is 1 and the conditional probability is 1/3.

In general for problems like these, the answer is between 1/3 and 1/2, closer to 1/3 if the attribute is common, and closer to 1/2 if it is rare.
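To check the general pattern without drawing a diagram for every n, here is a sketch that swaps in a slightly different technique: instead of listing equally likely cells, it lists the four kinds of child (girl or boy, with or without the attribute) and weights each family by its probability. The function name and the list of n values are my choices, and the independence assumptions are the ones stated above.

```python
from fractions import Fraction
from itertools import product


def prob_two_girls(n):
    """P(two girls | at least one girl with a 1-in-n attribute), computed exactly."""
    p_attr = Fraction(1, n)

    # Probability of each kind of child: (sex, has_attribute).
    child = {
        (sex, attr): Fraction(1, 2) * (p_attr if attr else 1 - p_attr)
        for sex in "BG"
        for attr in (True, False)
    }

    p_condition = Fraction(0)
    p_both = Fraction(0)
    for c1, c2 in product(child, repeat=2):
        weight = child[c1] * child[c2]  # children are assumed independent
        if ("G", True) in (c1, c2):  # condition: a girl with the attribute
            p_condition += weight
            if c1[0] == "G" and c2[0] == "G":  # statement: two girls
                p_both += weight
    return p_both / p_condition


for n in (1, 2, 7, 10, 1000):
    p = prob_two_girls(n)
    # Compare with the pattern from the diagrams.
    assert p == Fraction(2 * n - 1, 4 * n - 1)
    print(n, p, float(p))
```

The weighted version gives the same fractions as counting cells, and it avoids building millions of families when n is 1000.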

But Why?

At this point I hope you are satisfied that the answers we calculated are correct. Enumerating the sample space, as we did, is a reliable way to compute conditional probabilities. But it might not be clear why additional information, like the name of a child or the day they were born, is relevant to the probability that a family has two girls.

The key is to remember what we learned from Sully: if you succeed at something improbable, you probably made more than one attempt.

If a family has a girl born on Saturday, they have done something moderately improbable, which suggests that they had more than one chance, that is, more than one girl. If they have a girl named Florida, which is more improbable, it is even more likely that they have two girls.

These problems seem paradoxical because we have a strong intuition that the additional information is irrelevant. The resolution of the paradox is that our intuition is wrong. In these examples, names and birthdays are relevant because they make the condition of the conditional probability more strict. And if you meet a strict condition, it is likely that you had more than one chance.

Be Careful What You Ask For

When I first wrote about these problems in 2011, a reader objected that the wording of the questions is ambiguous. For example, here’s Gardner’s version again (with my revision):

Mr. Smith has two children. At least one of them is a [girl]. What is the probability that both children are [girls]?

And here is the objection:

- If we pick a family at random, ask if they have a girl, and learn that they do, the probability is 1/3 that the family has two girls.

- But if we pick a family at random, choose one of the children at random, and find that she’s a girl, the probability is 1/2 that the family has two girls.

In either case, we know that the family has at least one girl, but the answer depends on how we came to know that.
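The two readings can be compared by enumeration. This sketch is my own illustration of the reader's point, assuming four equally likely families and, in the second procedure, that each of the two children is equally likely to be the one we choose.

```python
from fractions import Fraction
from itertools import product

# Four equally likely families, written as (first child, second child).
families = list(product("BG", repeat=2))

# Procedure 1: pick a family, ask whether they have a girl,
# and keep the families that say yes.
asked = [f for f in families if "G" in f]
p_asked = Fraction(sum(f == ("G", "G") for f in asked), len(asked))

# Procedure 2: pick a family, then pick one child at random.
# Each (family, child index) pair is equally likely; keep the pairs
# where the chosen child is a girl.
observed = [(f, i) for f in families for i in (0, 1) if f[i] == "G"]
p_observed = Fraction(
    sum(f == ("G", "G") for f, _ in observed), len(observed)
)

print(p_asked, p_observed)  # 1/3 1/2
```

In the second procedure the two-girl family shows up twice, once for each child we might have chosen, which is why it carries more weight.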

I am sympathetic to this objection, up to a point. Yes, the question is ambiguous, but natural language is almost always ambiguous. As readers, we have to make assumptions about the author’s intent.

If I tell you that a family has at least one girl, without specifying how I came to know it, it’s reasonable to assume that all families with a girl are equally likely. I think that’s the natural interpretation of the question and, based on the answers Gardner and Mlodinow provide, that’s the interpretation they intended.

I offered this explanation to the reader who objected, but he was not satisfied. He replied at length, writing almost 4000 words about this problem, which is longer than this chapter. Sadly, we were not able to resolve our differences.

But this exchange helped me understand the difficulty of explaining this problem clearly, which helped when I wrote this chapter. So, if you think I succeeded, it’s probably because I had more than one chance.