Factors of Underrepresentation

I recently encountered a 2009 paper by Ceci, Williams, and Barnett, “Women’s underrepresentation in science: sociocultural and biological considerations”, which lists in the abstract these “factors unique to underrepresentation [of women] in math-intensive fields”:

(a) Math-proficient women disproportionately prefer careers in non–math-intensive fields and are more likely to leave math-intensive careers as they advance;

(b) more men than women score in the extreme math-proficient range on gatekeeper tests, such as the SAT Mathematics and the Graduate Record Examinations Quantitative Reasoning sections;

(c) women with high math competence are disproportionately more likely to have high verbal competence, allowing greater choice of professions; and

(d) in some math-intensive fields, women with children are penalized in promotion rates.

To people familiar with this area of research, none of these are surprising, but the third caught my attention because I recently looked at the correlation between math and verbal scores on the SAT and ACT. In general, they are highly correlated, with r around 0.7, and they are equally correlated for men and women. So I was curious to know where this claim comes from and, if it is true, how big a factor it might be.

As evidence, Ceci et al. summarize results from “a tracking study of 1,100 high-mathematics aptitude students who expressed a goal of majoring in mathematics or science in college”, which found:

“One determinant of who switched out of math/science fields was the asymmetry between their verbal and mathematics abilities. Women’s verbal abilities on average were nearly as strong as their mathematics abilities (only 61 points difference between their SAT-V and SAT-M), leading them to enter professions that prized verbal reasoning (e.g., law), whereas men’s verbal abilities were an average of 115 points lower than their mathematics ability, possibly leading them to view mathematics as their only strength.”

And they cite Achter, Lubinski, Benbow, & Eftekhari-Sanjani, 1999 and Wai, Lubinski, & Benbow, 2005.

I don’t have access to that dataset, but I ran a similar analysis with data from the National Longitudinal Survey of Youth 1997 (NLSY97), which “follows the lives of a sample of [8,984] American youth born between 1980-84”. The public dataset includes the participants’ scores on several standardized tests, including the SAT and ACT. Assuming that most participants took these exams when they were 17, they probably took them between 1997 and 2001.

I found that the pattern described by Ceci et al. also appears in this dataset. Although the correlation between math and verbal scores is the same for men and women, the slope of the regression line is not. In a group of male and female test-takers with the same math score, the verbal scores for the female test-takers are higher, on average. Near the high end of the range, the difference is about 35 points, which is a little smaller than the difference in the previous study, 54 points (the 115-point gap for men minus the 61-point gap for women).
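It might seem odd that two groups can have the same correlation but different regression slopes. One way this can happen is that the slope of verbal on math is r times the ratio of the standard deviations, so the slope changes if the spread of math scores differs. Here is a minimal sketch with synthetic data. The parameters and the helper names `fit_line` and `simulate_group` are made up for illustration; they are not estimates from NLSY97 and not necessarily the mechanism at work in that dataset.

```python
import numpy as np

def fit_line(math, verbal):
    """Least-squares fit of verbal on math; returns (slope, intercept, r)."""
    slope, intercept = np.polyfit(math, verbal, 1)
    r = np.corrcoef(math, verbal)[0, 1]
    return slope, intercept, r

def simulate_group(rng, n, sd_math, sd_verbal, r, mean=500):
    """Draw n (math, verbal) pairs from a bivariate normal distribution."""
    cov = r * sd_math * sd_verbal
    cov_matrix = [[sd_math**2, cov], [cov, sd_verbal**2]]
    scores = rng.multivariate_normal([mean, mean], cov_matrix, size=n)
    return scores[:, 0], scores[:, 1]

rng = np.random.default_rng(1)

# Hypothetical parameters, NOT estimates from NLSY97:
# same correlation in both groups, different spread in math scores.
fit_a = fit_line(*simulate_group(rng, 50_000, sd_math=100, sd_verbal=100, r=0.7))
fit_b = fit_line(*simulate_group(rng, 50_000, sd_math=120, sd_verbal=100, r=0.7))

for name, (slope, intercept, r) in [("A", fit_a), ("B", fit_b)]:
    predicted = intercept + slope * 750
    print(f"group {name}: r={r:.2f}, slope={slope:.2f}, "
          f"predicted verbal at math 750: {predicted:.0f}")
```

In this toy setup both groups have r near 0.7, but the group with the narrower math distribution has the steeper line, and so a higher predicted verbal score at the same high math score.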

So we might ask:

- Is this a big enough difference that it seems likely to affect career choices? For example, suppose Student A has scores M 750 V 660 and Student B has scores M 750 V 690. Would A be substantially more likely than B to “view mathematics as their only strength”?

- If we assume that the answer is yes, and that both students make career choices accordingly, how big an effect would this have on the sex ratios we see in math-intensive fields?

I don’t have the data to answer the first question, but we can use the data we have, and a model of the filtering processes, to put an upper bound on the second.

To summarize the results, the largest effect I found for factor (c) is that it might increase the sex ratio in a math-intensive field by 7-14%. For example, if the requirement for a math-intensive job is 700 or more on the SAT math section, the sex ratio among the people who meet this requirement is 1.8. Now suppose that everyone who meets this standard takes a math-intensive job, EXCEPT the people who also get 700 or more on the verbal section. If all of those people choose a different career, the sex ratio of the ones left in the math-intensive job goes up to 2.0. In this part of the range, the effect of factor (c) is non-negligible.
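The filtering model in this example can be sketched in a few lines. This is a simplified illustration, not the code from the notebook: the function names are my own, and the eight score records are invented to make the arithmetic easy to follow. For reference, going from 1.8 to 2.0 is an increase of roughly 11%.

```python
import numpy as np

def sex_ratio(is_male):
    """Ratio of men to women in a boolean array (True = male)."""
    is_male = np.asarray(is_male, dtype=bool)
    return is_male.sum() / (~is_male).sum()

def filtered_sex_ratios(math, verbal, is_male, math_min=700, verbal_min=700):
    """Sex ratio among people who meet the math bar, before and after
    removing everyone who also meets the verbal bar."""
    math = np.asarray(math)
    verbal = np.asarray(verbal)
    is_male = np.asarray(is_male, dtype=bool)

    meets_math = math >= math_min
    stays = meets_math & (verbal < verbal_min)  # did not qualify on verbal
    return sex_ratio(is_male[meets_math]), sex_ratio(is_male[stays])

# Invented records for illustration only (NOT NLSY97 data).
math    = [720, 750, 710, 760, 730, 700, 690, 780]
verbal  = [650, 720, 600, 680, 710, 640, 700, 730]
is_male = [True, False, True, True, False, False, True, True]

before, after = filtered_sex_ratios(math, verbal, is_male)
print(f"before: {before:.2f}, after: {after:.2f}")
```

Because the model assumes that everyone who clears the verbal bar leaves, it is a worst case, which is why the result is an upper bound on the effect of factor (c).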

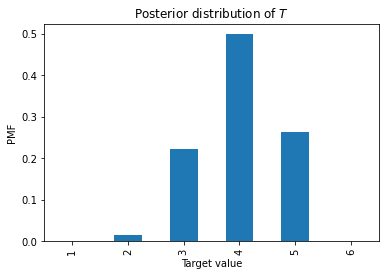

To see what happens as we move farther into the tail, I used the NLSY data to create a Gaussian model, and used the model to simulate test scores beyond the range of the SAT. With this model, we see that the effect of factor (b) increases as we make the requirements stricter.

For example, if the threshold score is 800 for the math and verbal sections, the sex ratio among the people who meet the math requirement is 4.6. If the people who meet the verbal requirement choose different careers, the sex ratio among the people left behind is 4.9 (an increase of about 7%, compared with about 11% when the threshold is 700). So it seems like the effect of factor (c) gets smaller as we go farther into the tails of the distributions.
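To give a sense of how a Gaussian model like this can be used, here is a rough sketch that draws scores from a bivariate normal distribution for each group and applies the same filter. Simulated scores are not capped at 800, which is what lets the model reach beyond the range of the real test. All of the means, standard deviations, and the correlation below are placeholders I chose for illustration, not the values fitted to the NLSY data, so the ratios it prints will not match the ones reported here.

```python
import numpy as np

def simulate_scores(rng, n, mean_math, sd_math, mean_verbal, sd_verbal, r):
    """Draw n rows of (math, verbal) from a bivariate normal model."""
    cov = r * sd_math * sd_verbal
    cov_matrix = [[sd_math**2, cov], [cov, sd_verbal**2]]
    return rng.multivariate_normal([mean_math, mean_verbal], cov_matrix, size=n)

def count_stayers(scores, math_min, verbal_min):
    """Return (number meeting the math bar, number of those below the verbal bar)."""
    meets_math = scores[:, 0] >= math_min
    stays = meets_math & (scores[:, 1] < verbal_min)
    return meets_math.sum(), stays.sum()

rng = np.random.default_rng(2)
n = 1_000_000  # equal group sizes, an assumption of this sketch

# Hypothetical parameters, NOT the values estimated from NLSY97.
men = simulate_scores(rng, n, 520, 115, 500, 105, 0.7)
women = simulate_scores(rng, n, 500, 105, 505, 105, 0.7)

men_math, men_stay = count_stayers(men, 800, 800)
women_math, women_stay = count_stayers(women, 800, 800)

ratio_before = men_math / women_math
ratio_after = men_stay / women_stay
print(f"meet math requirement: {ratio_before:.2f}")
print(f"after verbal filter:   {ratio_after:.2f}")
```

A large sample is needed because only a small fraction of simulated test-takers land this far in the tail, so the ratios are noisy with fewer draws.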

Finally, I used the model to decompose factor (b) into two parts, the difference in means and the difference in variance. When the threshold is 800, the contributions of these two parts are about equal; that is:

- If we set the means to be the same, but preserve the difference in variance, the sex ratio among people who meet the math requirement is about 2.2.

- If we set the variances to be the same, but preserve the difference in means, the sex ratio among people who meet the math requirement is about 2.2.
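The decomposition above can be sketched with nothing more than the normal survival function. In this version the "sex ratio" is the ratio of tail fractions, which assumes equal numbers of men and women; the (mean, standard deviation) pairs are hypothetical placeholders rather than the fitted values, and averaging the two groups to get a shared mean or shared standard deviation is my own simplification.

```python
from math import erfc, sqrt

def tail_fraction(threshold, mean, sd):
    """P(X >= threshold) for X ~ Normal(mean, sd)."""
    z = (threshold - mean) / sd
    return 0.5 * erfc(z / sqrt(2))

def tail_ratio(threshold, male, female):
    """Ratio of tail fractions; male and female are (mean, sd) pairs."""
    return tail_fraction(threshold, *male) / tail_fraction(threshold, *female)

# Hypothetical (mean, sd) of math scores, NOT the NLSY97 estimates.
male = (520, 115)
female = (500, 105)

shared_mean = (male[0] + female[0]) / 2
shared_sd = (male[1] + female[1]) / 2

both = tail_ratio(800, male, female)
variance_only = tail_ratio(800, (shared_mean, male[1]), (shared_mean, female[1]))
means_only = tail_ratio(800, (male[0], shared_sd), (female[0], shared_sd))

print(f"both differences:   {both:.2f}")
print(f"variance only:      {variance_only:.2f}")
print(f"means only:         {means_only:.2f}")
```

As a rough sanity check on the numbers reported here, if the two contributions combined multiplicatively, 2.2 × 2.2 would be about 4.8, which is in the neighborhood of the 4.6 found when both differences are present.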

In summary:

- In a simple model, the effect of factor (c) is modest; in reality, it is likely to be smaller.

- In the same model, the effect of factor (b) is substantial, and can be decomposed into roughly equal contributions from differences in means and variances.

The details of this analysis are in this Jupyter notebook, which you can run on Colab.